Wavesurfer.js: MultiCanvas Renderer

This is my first blog post in quite a while. I've been busy working away on my LED animation sequencing software and I'm really pleased with the results so far (a future blog post will cover the software in detail).

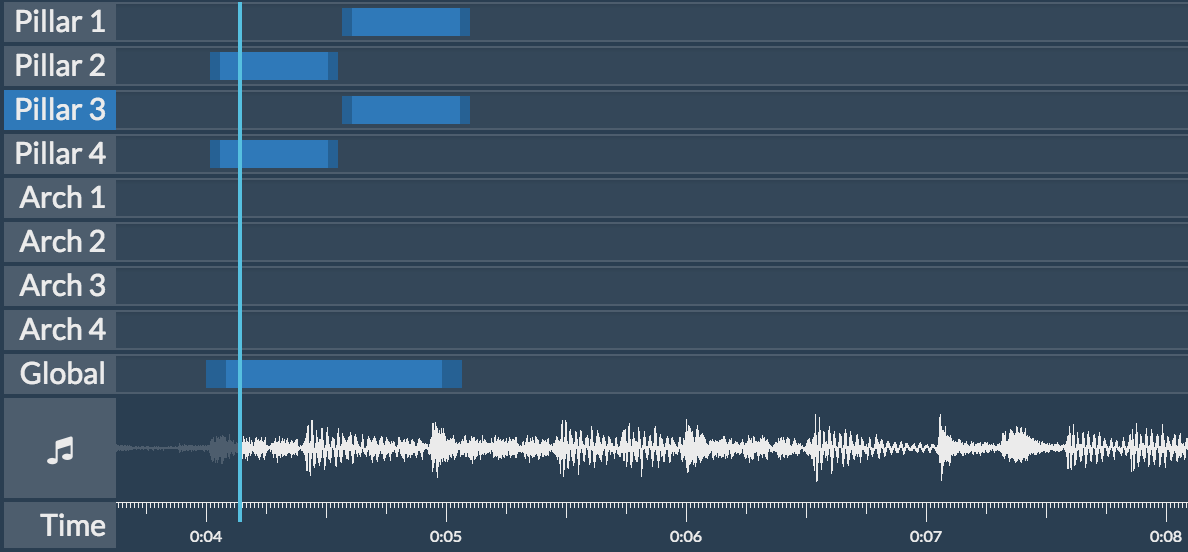

One feature of the software is an audio waveform that serves two purposes: it helps with animation timing and makes it easy to navigate the animation timeline.

Wavesurfer.js

To implement the timeline, I used the excellent wavesurfer.js. Out of the box, wavesurfer comes with navigation, audio playback, waveform generation, and a bunch of other configuration options and events to hook into.

Browser woes

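The one issue I encountered was that large waveforms wouldn't render. After some research, I found that this was a browser limitation: browsers cap the maximum size of a single canvas element (the exact limit varies between browsers), and a long waveform drawn onto one canvas can exceed it.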

The solution

To work around this, I implemented a MultiCanvas renderer for wavesurfer, which has since been accepted into the repository and released in version 1.1.0. This was my first ever contribution to an open-source project, so I was pretty stoked to have it accepted and to receive feedback from others.

The MultiCanvas renderer works by placing multiple canvases side by side, with the width of each controlled by the maxCanvasWidth wavesurfer property. The renderer itself can be used by setting the renderer wavesurfer property to 'MultiCanvas'.
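To make the idea concrete, here is a minimal TypeScript sketch of how a fixed maximum canvas width could divide one long waveform into adjacent canvases. The helper computeCanvasLayout and the CanvasSlice shape are hypothetical, invented for this illustration. With the real library you only set the renderer and maxCanvasWidth properties, and the renderer creates the canvases itself.

```typescript
// Hypothetical helper, not part of wavesurfer's API: it only illustrates how a
// fixed maximum canvas width turns one long waveform into several canvases.
interface CanvasSlice {
  left: number; // x offset of the canvas within the full waveform, in pixels
  width: number; // width of the canvas, in pixels
}

function computeCanvasLayout(totalWidth: number, maxCanvasWidth: number): CanvasSlice[] {
  if (maxCanvasWidth <= 0) {
    throw new RangeError("maxCanvasWidth must be positive");
  }
  const count = Math.ceil(totalWidth / maxCanvasWidth);
  const slices: CanvasSlice[] = [];
  for (let i = 0; i < count; i++) {
    const left = i * maxCanvasWidth;
    // Every canvas is full width except possibly the last, which covers the remainder.
    slices.push({ left, width: Math.min(maxCanvasWidth, totalWidth - left) });
  }
  return slices;
}

// A 10,000px waveform with a 4,000px limit needs three adjacent canvases.
const layout = computeCanvasLayout(10000, 4000);
console.log(layout.map((s) => `${s.left}+${s.width}`).join(", "));
// prints "0+4000, 4000+4000, 8000+2000"
```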

Challenges

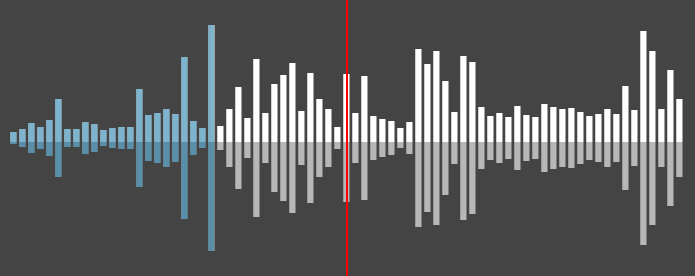

Wavesurfer supports two modes of rendering: lines (a traditional waveform) and bars (which look like a histogram, with each bar representing the amplitude of a short slice of the audio).
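The following sketch shows one plausible way of laying out bars: each normalised peak becomes a rectangle mirrored around the centre line, placed on a fixed step of bar width plus gap. The function barsFromPeaks and its barWidth, gap and height parameters are assumptions for illustration, not wavesurfer's own implementation.

```typescript
// Hypothetical sketch: turn normalised peaks (-1..1) into mirrored bar rectangles.
// barWidth, gap and height are illustrative parameters chosen for this example.
interface BarRect {
  x: number;
  y: number;
  width: number;
  height: number;
}

function barsFromPeaks(peaks: number[], barWidth: number, gap: number, height: number): BarRect[] {
  const halfHeight = height / 2;
  return peaks.map((peak, i) => {
    // Each bar extends the same distance above and below the centre line.
    const barHalf = Math.abs(peak) * halfHeight;
    return { x: i * (barWidth + gap), y: halfHeight - barHalf, width: barWidth, height: barHalf * 2 };
  });
}

// With a 3px bar and a 2px gap, bars start every 5px: 0, 5, 10, ...
const bars = barsFromPeaks([0.5, -1, 0.25], 3, 2, 100);
console.log(bars[1]); // { x: 5, y: 0, width: 3, height: 100 }
```

Because bar positions follow that fixed step rather than the canvas width, a bar can start just before a canvas edge. With a 4px bar and a 3px gap (a 7px step), for example, the bar at x = 3997 covers 3997 to 4001 and crosses a boundary at x = 4000.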

The image above shows a wavesurfer timeline that uses bars. I've added a red line to indicate the end of one canvas and the beginning of the next. Notice that a bar sits right on this line.

This complicates things, as the two parts of the bar need to be rendered in different canvases.

My solution was to wrap the canvas fillRect calls and pass them through the following algorithm:

For each canvas:

1. Calculate the intersection between the canvas bounds and the waveform bar's coordinates.
2. If an intersection exists, render that intersection to the canvas.

The implementation for the line waveform was similar. I simply rendered the amplitude data for each canvas until I reached the end of that canvas, then filled out the line and repeated with the next canvas.
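Both approaches can be sketched as small TypeScript functions, assuming every shape is first computed in full-waveform coordinates and each canvas knows its left offset and width. fillRectAcrossCanvases clips a bar against every canvas (the two steps listed above), and splitLinePoints hands each canvas the line points within its bounds. All names here are hypothetical, and the RectFiller interface stands in for a real CanvasRenderingContext2D so the example can run outside a browser.

```typescript
// Hypothetical sketch of splitting drawing work across adjacent canvases.
// Type and function names are illustrative, not wavesurfer's internals.
interface BarRect {
  x: number;
  y: number;
  width: number;
  height: number;
}
interface CanvasSlice {
  left: number; // x offset of the canvas within the full waveform
  width: number;
}
interface WavePoint {
  x: number;
  y: number;
}
// Anything with a fillRect method, e.g. a CanvasRenderingContext2D.
interface RectFiller {
  fillRect(x: number, y: number, width: number, height: number): void;
}

// Bars: for each canvas, intersect the bar with the canvas bounds and, if they
// overlap, draw only that part, shifted so the canvas's left edge is x = 0.
function fillRectAcrossCanvases(rect: BarRect, canvases: CanvasSlice[], contexts: RectFiller[]): void {
  canvases.forEach((canvas, i) => {
    const start = Math.max(rect.x, canvas.left);
    const end = Math.min(rect.x + rect.width, canvas.left + canvas.width);
    const ctx = contexts[i];
    if (ctx && end > start) {
      ctx.fillRect(start - canvas.left, rect.y, end - start, rect.height);
    }
  });
}

// Lines: give each canvas the points inside its bounds, in local coordinates.
// A point exactly on a shared edge goes to both canvases, so the two paths meet.
function splitLinePoints(points: WavePoint[], canvases: CanvasSlice[]): WavePoint[][] {
  return canvases.map((canvas) =>
    points
      .filter((p) => p.x >= canvas.left && p.x <= canvas.left + canvas.width)
      .map((p) => ({ x: p.x - canvas.left, y: p.y })),
  );
}

// Usage, with a recording stub in place of real 2D contexts.
class RecordingContext implements RectFiller {
  calls: number[][] = [];
  fillRect(x: number, y: number, width: number, height: number): void {
    this.calls.push([x, y, width, height]);
  }
}

const canvases: CanvasSlice[] = [
  { left: 0, width: 4000 },
  { left: 4000, width: 4000 },
];
const contexts = [new RecordingContext(), new RecordingContext()];
// A 4px bar at x = 3998 straddles the boundary at x = 4000:
// canvas 0 receives fillRect(3998, 10, 2, 80), canvas 1 receives fillRect(0, 10, 2, 80).
fillRectAcrossCanvases({ x: 3998, y: 10, width: 4, height: 80 }, canvases, contexts);

// Canvas 0 gets x = 3999 and 4000; canvas 1 gets the shared edge point as local x = 0, then x = 1.
const pieces = splitLinePoints(
  [
    { x: 3999, y: 1 },
    { x: 4000, y: 2 },
    { x: 4001, y: 3 },
  ],
  canvases,
);
```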

After my initial implementation, I noticed some thin gaps between the canvases. I solved this by making adjacent canvases overlap slightly (1px multiplied by the device pixel ratio).
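A minimal sketch of the overlap fix, assuming canvas widths are measured in backing-store (device) pixels, so that one CSS pixel equals pixelRatio of them. computeOverlappingLayout is a hypothetical helper; in a browser you would pass window.devicePixelRatio as pixelRatio.

```typescript
// Hypothetical sketch: widen every canvas except the last by one CSS pixel,
// i.e. 1 * pixelRatio backing-store pixels, so neighbours overlap slightly and
// no thin gap shows where they meet. Not wavesurfer's actual code.
interface CanvasSlice {
  left: number; // x offset within the full waveform, in device pixels
  width: number; // canvas width, in device pixels
}

function computeOverlappingLayout(totalWidth: number, maxCanvasWidth: number, pixelRatio: number): CanvasSlice[] {
  if (maxCanvasWidth <= 0) {
    throw new RangeError("maxCanvasWidth must be positive");
  }
  const overlap = 1 * pixelRatio;
  const count = Math.ceil(totalWidth / maxCanvasWidth);
  const slices: CanvasSlice[] = [];
  for (let i = 0; i < count; i++) {
    // Start positions are unchanged; only the right edge extends into the next canvas.
    const left = i * maxCanvasWidth;
    const isLast = i === count - 1;
    const width = isLast ? totalWidth - left : Math.min(maxCanvasWidth + overlap, totalWidth - left);
    slices.push({ left, width });
  }
  return slices;
}

// On a display with a device pixel ratio of 2, each non-final canvas grows by 2px.
const overlapped = computeOverlappingLayout(10000, 4000, 2);
console.log(overlapped.map((s) => `${s.left}+${s.width}`).join(", "));
// prints "0+4002, 4000+4002, 8000+2000"
```

If bars are clipped against these widened bounds, pixels in the overlap are painted on both canvases. That is harmless for opaque fills, but a semi-transparent colour would appear darker along each seam.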